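Construction and Application of a Naive Bayesian Classifier Based on Word Segmentation: graduation thesis, task statement, proposal report, literature review, foreign-literature translation with original text, Python code and lexicon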

Construction and Application of a Naive Bayesian Classifier Based on Word Segmentation

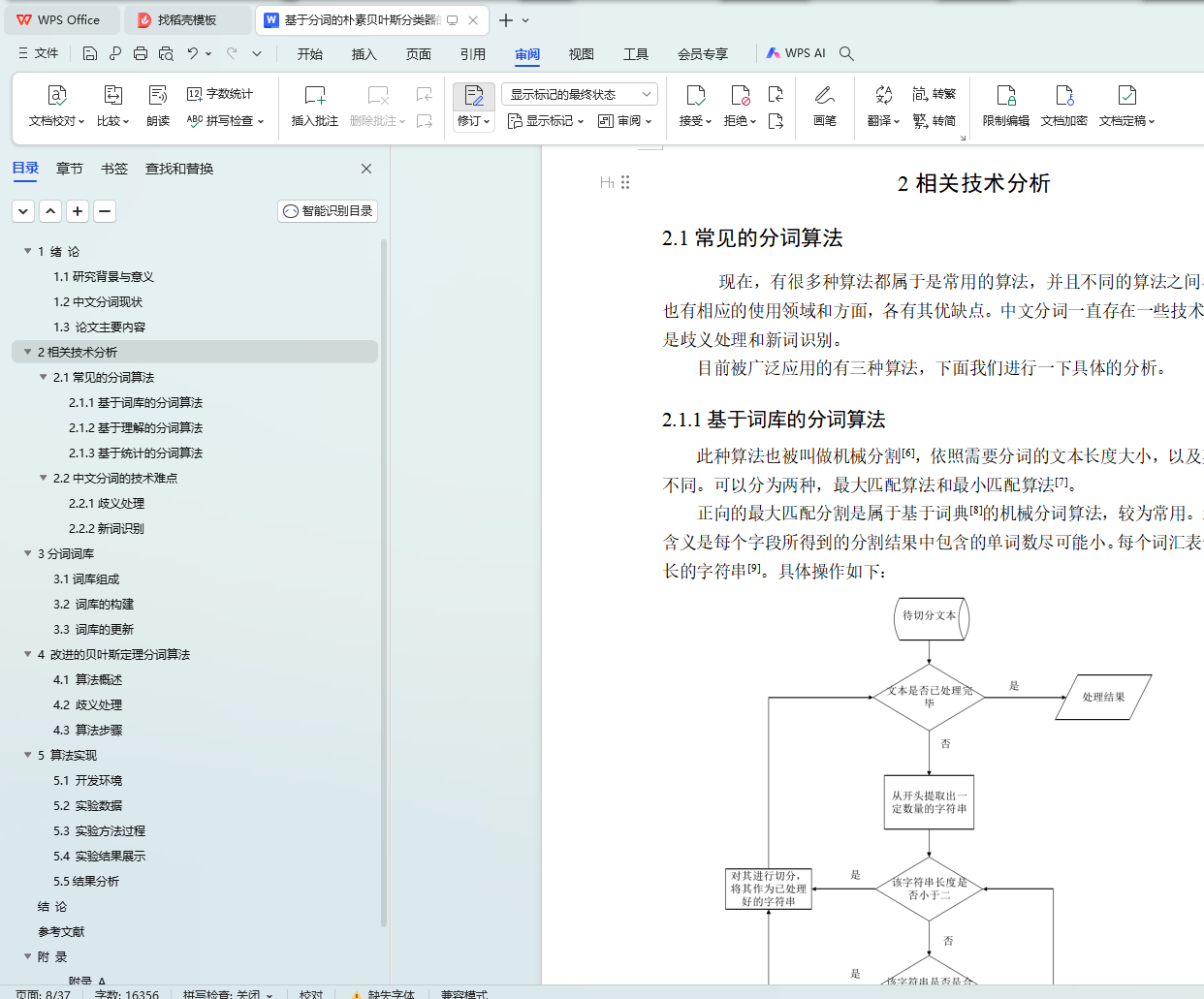

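【Abstract】 Through the efforts of researchers in China and abroad in recent years, a variety of Chinese word segmentation algorithms have been developed. The main approaches fall into three classes: dictionary-based, understanding-based, and statistics-based, and in practice they are often combined. Current systems rely mainly on these three classes. Chinese, however, is highly complex, and new words keep emerging over time, so these three classes of algorithms do not fully meet practical needs.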

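Building on these algorithms, this thesis proposes a word segmentation model constructed on a Bayesian network, which handles overlapping ambiguity, combinational ambiguity, and related problems within a single model framework. With this processing, the new algorithm combines the strengths of the commonly used algorithms, avoids their weaknesses, and effectively improves segmentation efficiency.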

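Results under test conditions show that the new algorithm segments text comparatively well: it handles ambiguity and out-of-vocabulary words effectively and can meet the processing needs of a wide range of Chinese-language information.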

【Keywords】 Chinese word segmentation, Bayesian classifier, lexicon construction

Construction and Application of a Naive Bayesian Classifier Based on Word Segmentation

【Abstract】 Through the efforts of many scholars and researchers at home and abroad in recent years, many Chinese word segmentation algorithms have been developed. The main algorithms are dictionary-based, comprehension-based, and statistics-based, and they are often combined in practice. Current systems rely mainly on these three kinds of algorithms. Chinese, however, is highly complex, and new words keep emerging over time, so these three kinds of algorithms cannot fully meet practical needs.

Therefore, on the basis of these algorithms, this paper proposes a word segmentation model built on a Bayesian network, and resolves overlapping ambiguity, combinational ambiguity, and similar problems within the model framework. After this processing, the new algorithm combines the advantages of the commonly used algorithms, avoids their limitations, and effectively improves the efficiency of word segmentation.

Results under test conditions show that the new algorithm segments text comparatively well. It handles ambiguity and out-of-vocabulary words effectively, and it can meet the processing requirements of a wide range of Chinese-language information.

【Key Words】 Chinese word segmentation, Bayesian classifier, lexicon construction

Contents

List of Figures

List of Tables